Building a Visual Search Algorithm

October 13, 2017

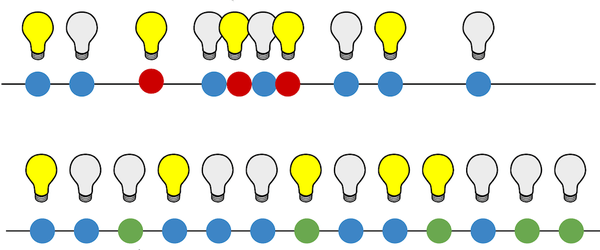

This story, like all great stories, begins with a light fixture. In particular, this light fixture:

Chris's idea of a light that never goes out

Datascope intern Chris spotted this light fixture while out at lunch one day and was immediately transfixed by its glowing, orthogonal orbiness. Hoping to find where he could buy his own wonderful light fixture, he took a picture with his camera and used Google Reverse Image Search to find places that might have the product online. However, while Google was able to show him lots of pictures of light fixtures, it wasn't able to show him the exact product he was looking for. This is partially because Google Reverse Image Search is designed to find similar pictures and not similar items.

This led us to consider what a visual item search might look like and how we might build one. Leveraging some articles on style transfer and auto-encoders we sort-of remembered, we started to think about ways that machine learning could perform this search.

Some quick googling revealed that we weren't the first people to have this idea. Back in March of this year, Pinterest released a beta version of a visual search application called Lens. In June, Wayfair introduced a visual search feature to their app and website. A few days after Chris found the light fixture of his dreams, Amazon announced a "product social discovery network" that's basically Instagram with links directly from photos to Prime pages.

While this effectively crushed our dreams of becoming Silicon Valley billionaires, it gave us confidence that visual search was achievable using existing technologies. Because we were so excited about this idea, we decided to see how much progress we could make in building a visual search engine using publicly available data and open-source code.

So, What Is This Visual Search Thing Anyway?

To build a visual search engine, we need a model that works on images. One of the challenges in dealing with images is high dimensionality. Even a relatively low-resolution 640x480 color photograph contains nearly one million data points (640 × 480 pixels × 3 color channels = 921,600 values), making it difficult to use as a direct input to machine learning classifiers. Fortunately, one type of neural network excels at working with and learning from images: the Convolutional Neural Network (CNN), which is good at encoding local relationships between pixel values in nearby areas of the image. I'll leave a more detailed explanation of CNNs to someone smarter than me. But to briefly summarize, CNNs use a special mathematical architecture (read: set of rules) called a convolution layer that sweeps a small window over images, drastically reducing their dimensionality while retaining salient features like shapes, colors, and edges. In fact, the most successful CNNs stack several convolution layers to create so-called Deep Networks that are extremely successful at visual recognition tasks.
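To make the "sweeping window" idea a little more concrete, here is a minimal NumPy sketch of a single convolution filter sliding over a tiny grayscale image. The function name, the toy 6x6 image, and the hand-picked edge-detecting kernel are all illustrative assumptions; a real CNN learns its kernels during training and applies many of them at once.

```python
import numpy as np

def slide_window(image, kernel):
    """Sweep `kernel` over `image` (no padding, stride 1) and sum the products.

    Strictly speaking this is a cross-correlation, which is what deep learning
    libraries usually compute under the name "convolution".
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Raw input size of a 640x480 color photo: one value per pixel per channel.
n_values = 640 * 480 * 3

# Toy image: dark on the left half, bright on the right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-picked kernel that responds to vertical edges (illustrative only).
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

response = slide_window(image, kernel)
print(n_values)
print(response)
```

The response is largest where the window straddles the dark-to-bright boundary and zero over the flat regions, which is the sense in which a convolution layer picks out local features like edges.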

These CNNs have a large number of settings to configure, and they typically require large datasets and a lot of training in order to reach very high accuracy in visual classification. Fortunately for us, many programmers and computer vision researchers have decided to open-source not only deep learning software libraries but also the results of pre-trained models. This allows us to start with state-of-the-art models created by dedicated research teams and then slightly modify them to fit our own particular problem.

Building the Search Engine

While our interest in product search started with a light fixture, we ended up training our model on images of clothing from the Deep Fashion data set. We chose this data set because it contains about 300,000 images annotated with category labels (Does the image contain a hoodie? A dress? A t-shirt?), attribute labels (Does this shirt have a particular pattern? How is this dress cut? Does this hoodie have a logo?), and bounding box information for these labels. This bounding box information is very helpful in creating a training set, because it allows us to separate the product from the rest of the image, which is a necessary pre-processing step[1]. The Deep Fashion data set also provides examples of products in a wide variety of shapes, sizes, colors, patterns, textures, etc. Learning to train a model on the Deep Fashion data set will give us insight into how to extend this method to other items.
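As a rough illustration of that pre-processing step, here is a minimal sketch of cropping a product out of an image given its bounding box. The helper name and the `(x1, y1, x2, y2)` box convention (pixel coordinates, with the bottom-right corner exclusive) are assumptions made for this example, so check the annotation format of your own data before borrowing it.

```python
import numpy as np

def crop_to_bounding_box(image, box):
    """Return the region of `image` (height x width x channels) inside `box`.

    `box` is assumed to be (x1, y1, x2, y2) in pixel coordinates, clipped here
    to the image borders in case an annotation runs slightly off the edge.
    """
    x1, y1, x2, y2 = box
    height, width = image.shape[:2]
    x1, x2 = max(0, x1), min(width, x2)
    y1, y2 = max(0, y1), min(height, y2)
    # NumPy images are indexed [row, column], i.e. [y, x].
    return image[y1:y2, x1:x2]

# Stand-in for a 300-pixel-tall, 200-pixel-wide RGB photo.
image = np.zeros((300, 200, 3), dtype=np.uint8)
crop = crop_to_bounding_box(image, (50, 40, 150, 260))
print(crop.shape)
```

The cropped array can then be resized to the network's input size, so the model sees mostly product and very little background.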

With the data set selected, we need to actually build our visual product search algorithm. Lucky for us, the engineering team behind Pinterest's visual search product published a research paper outlining their approach. We can use the results of their work as a blueprint for creating our own visual search.

The core concept behind this method of visual search relies on using the intermediate results of a pre-trained CNN as a vector—a list of numbers representing characteristic features of the input image—and finding products that reduce to similar vectors. The logic behind this is that a CNN trained at visual classification typically has two sections: a series of convolutional layers trained at extracting meaningful visual information from the input image and a series of dense layers trained at performing the classification task. Using the output of the convolutional layers to represent images allows us to perform comparisons in a vector space that corresponds to visual attributes as opposed to semantic attributes (i.e. the predicted image category). Instead of answering the question "Is this a chair?", we can answer the question, "Does this image have similar color/pattern/shading as another image?"

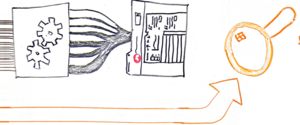

Sketch of the VGG-16 architecture

For this task, we didn't need to build a network from scratch, so we decided to use the VGG-16 network architecture because of its strong performance at visual classification[2]. Getting started with a well-established architecture is almost comically easy thanks to the high-level neural network library Keras. We can directly import the VGG-16 architecture and download the weights from a pre-trained model in one simple step.

```python
from keras.applications import VGG16

# Load VGG-16 with weights pre-trained on ImageNet (downloaded on first use).
model = VGG16(weights='imagenet')
```

Then, we can create a model that gives us just the output of the first dense (or fully connected) layer and start producing feature vectors.

```python
import numpy as np
from keras.applications import imagenet_utils
from keras.preprocessing.image import img_to_array, load_img
from keras.models import Model

# Expose the output of the first fully connected layer ('fc1') as the feature vector.
feature_model = Model(inputs=model.input, outputs=model.get_layer('fc1').output)

input_shape = (224, 224)  # VGG-16 expects 224x224 RGB input
preprocess = imagenet_utils.preprocess_input

image_path = 'path/to/image/file.jpg'
image = load_img(image_path, target_size=input_shape)
image = img_to_array(image)
image = np.expand_dims(image, axis=0)  # add a batch dimension: (1, 224, 224, 3)
image = preprocess(image)

feature = feature_model.predict(image)  # one 4,096-dimensional vector per image
```

Using the pre-trained weights from VGG-16 gives us pretty good results, but these weights come from the ImageNet challenge, which involves classifying images into 1,000 categories ranging from fire truck to airplane to one of 120 different breeds of dog (seriously). However, for our task, we want to look at small differences between pieces of clothing. To VGG-16, all 80,000 images of a dress might look the same since they fall in the same category, so we need to train our network to learn more subtle differences.

Fortunately, we can retrain our network on the DeepFashion data set while still leveraging the power of pre-trained networks through a technique known as transfer learning. This means that we take a pre-trained network like VGG-16 and re-use the weights in the convolutional layers, but learn completely new weights (and architectures) for the dense classification layers at the end. Again, Keras makes this process very simple for us:

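```python
from keras.layers import Dense, Flatten

# `classes` is assumed to hold the list of category labels, defined elsewhere.
# Load only the convolutional layers, with their pre-trained ImageNet weights.
base_model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Flatten the 7x7x512 convolutional output into one vector per image.
x = Flatten()(base_model.output)
x = Dense(4096, activation='relu', name='fc1')(x)
predictions = Dense(len(classes), activation='softmax', name='predictions')(x)
new_model = Model(inputs=base_model.input, outputs=predictions)

# Freeze the pre-trained layers so that only the new dense layers are trained.
for layer in base_model.layers:
    layer.trainable = False

new_model.compile(optimizer='Adadelta', loss='sparse_categorical_crossentropy',
                  metrics=['sparse_categorical_accuracy'])
```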

Here, we have removed the top layers of the VGG-16 network and replaced them with a 4,096-node dense hidden layer[3] and softmax-activated output layer[4], which gives predictions for which of the 50 Deep Fashion categories the image belongs to. We are going to use the output of this dense hidden layer as the feature vector in our product search.
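For readers who haven't met softmax before, here is a minimal NumPy sketch of what that output layer's activation does to a vector of raw scores. The three scores are made up for illustration, and Keras applies its own implementation inside the layer, so this is only meant to show the arithmetic.

```python
import numpy as np

def softmax(scores):
    """Turn raw scores into positive values that sum to one."""
    # Subtracting the maximum does not change the result but avoids overflow.
    shifted = scores - np.max(scores)
    exponentials = np.exp(shifted)
    return exponentials / exponentials.sum()

# Made-up raw scores for three hypothetical categories.
scores = np.array([2.0, 1.0, 0.1])
probabilities = softmax(scores)
print(probabilities)
```

The largest raw score still gets the largest share, but every category ends up with a value between zero and one that can be read as a predicted probability.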

Now that we have our model set up, we just have to train it. Using a cloud computing service like AWS allows us to save both time and money by giving us access to machines with specialized image processing capabilities[5] and preconfigured deep learning software for only 90 cents an hour. With discount prices like these we can't afford to not train this network. After a few hours, the loss started to converge, so we stopped training. Training a neural network is a whole artform unto itself, but since we were just exploring the idea, we didn't want to spend too much time on this step before moving on to testing.

Creating a Map of the Fashion World

The idea behind our product search method is that our CNN takes in an image of a product and puts out a set of coordinates where similar products have nearby coordinates. To see how this idea held up, we wanted to visualize where different products wound up in the coordinate space created by our CNN. However, the coordinates created from our CNN are 4,096-dimensional, which means my monitor is 4,094 dimensions short of being able to properly recreate this space. Instead, we used a popular dimensionality-reduction technique known as t-SNE to create a 2-dimensional representation of our full feature space. Taking a few hundred sample images and plotting them in this 2D map gives us a quick check of how well our feature space organizes similar items. We see that similar items do cluster together: similar products land near each other on the map.

Testing Different Models

While t-SNE is an interesting visualization, it doesn’t tell us how well the model performs at the product search task. During development, we ended up with a few different models for visual search that we wanted to test: the two different fully connected hidden layers from the pre-trained VGG-16 network (we'll call them FC6 and FC7); the fully connected layer from our retrained model; and the fully connected layer from yet another model trained on a subset of the fashion images only featuring the 22 most common DeepFashion categories. In addition to these separate models, we also had to decide on a metric to measure the distance between feature vectors. For our testing, we limited ourselves to the L1 (or taxicab) distance, the L2 (or Euclidean) distance and the Hamming distance applied to feature vectors that have been turned into 4,096-bit binary strings based on whether or not their value was positive in each dimension. There are certainly more sophisticated metrics we could have employed, but we started out with the simplest choices to see how well they worked.
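The three metrics described above are short enough to sketch in NumPy. The function names and the two 4-dimensional toy vectors are illustrative assumptions (the real feature vectors have 4,096 dimensions), and this is a sketch of the definitions rather than the code we actually ran.

```python
import numpy as np

def l1_distance(a, b):
    """Taxicab distance: sum of absolute differences."""
    return float(np.abs(a - b).sum())

def l2_distance(a, b):
    """Euclidean distance: square root of the sum of squared differences."""
    return float(np.sqrt(((a - b) ** 2).sum()))

def hamming_distance(a, b):
    """Binarize each vector (is the value positive?) and count differing bits."""
    return int(np.count_nonzero((a > 0) != (b > 0)))

# Toy stand-ins for two feature vectors.
a = np.array([0.0, 2.0, 0.5, 0.0])
b = np.array([1.0, 0.0, 0.5, 0.0])

print(l1_distance(a, b))
print(l2_distance(a, b))
print(hamming_distance(a, b))
```

Note that the Hamming version throws away magnitudes entirely: it only asks whether each feature is switched on or off, which makes it cheap to compute and store.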

In evaluating the results of our different models, we aimed to consistently judge the rather subjective question of "do these two things match?" Starting with four different models and three distance metrics, we needed a quick way to identify the most promising models. To do so, we started with a randomly sampled subset of 20,000 images and generated their feature vectors with each of the models. Then, we selected 1,000 images from that set and found their nearest neighbors using each of the three metrics. We evaluated accuracy by testing whether the nearest match belonged to the same category as the source image. For example, if we randomly selected an image of a romper, then we would count the matching algorithm as being accurate if the suggested match is also a romper.
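The evaluation loop described above can be sketched in a few lines. Everything here is a toy stand-in: the function name, the five 2-dimensional "feature vectors", and their labels are made up, and the sketch uses the L1 distance with a brute-force search rather than anything optimized.

```python
import numpy as np

def top1_category_accuracy(features, labels, query_indices):
    """Fraction of queries whose nearest neighbor shares their category."""
    hits = 0
    for q in query_indices:
        # L1 distance from the query to every image in the set.
        distances = np.abs(features - features[q]).sum(axis=1)
        # An image is always closest to itself, so rule that match out.
        distances[q] = np.inf
        nearest = int(np.argmin(distances))
        hits += int(labels[nearest] == labels[q])
    return hits / len(query_indices)

# Toy data: five images with 2-dimensional features and category ids.
features = np.array([
    [0.0, 0.0],
    [0.1, 0.0],
    [5.0, 5.0],
    [5.1, 5.0],
    [2.4, 2.4],
])
labels = np.array([0, 0, 1, 1, 1])

accuracy = top1_category_accuracy(features, labels, [0, 2, 4])
print(accuracy)
```

In this toy set, the last image sits between the two clusters and its nearest neighbor belongs to the other category, so two of the three queries count as accurate.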

First, we performed a control test and saw that randomly selecting pairs from the subset gives us 10.8% accuracy (this relatively high baseline is due to the highly imbalanced nature of the category distribution). The off-the-shelf VGG-16 network already beat that benchmark, but the retrained networks gave us an even greater boost in performance, with the network trained on the full set of categories giving us up to 60% accuracy in matching items on this subset.
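To see why imbalance pushes the random baseline up, it helps to compute the chance that two randomly chosen images share a category. The sketch below does this exactly for a made-up label list; the function name and the category counts are hypothetical, and our control test sampled random pairs rather than using this formula.

```python
from collections import Counter

def random_match_accuracy(labels):
    """Probability that two distinct, randomly chosen items share a label."""
    counts = Counter(labels)
    n = len(labels)
    same_label_pairs = sum(c * (c - 1) for c in counts.values())
    return same_label_pairs / (n * (n - 1))

# Hypothetical imbalanced set: one category dominates.
imbalanced = ["dress"] * 6 + ["tee"] * 3 + ["romper"] * 1

# Hypothetical balanced set with the same number of categories.
balanced = ["dress"] * 4 + ["tee"] * 4 + ["romper"] * 4

print(random_match_accuracy(imbalanced))
print(random_match_accuracy(balanced))
```

The dominant category makes accidental matches much more likely, which is why a random baseline on an imbalanced data set can sit well above one divided by the number of categories.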

Three examples of product matches generated by our product matching scheme, with the bounding box outlined in red. In each pair, the randomly selected image is on the left and the closest product as determined by our algorithm is on the right.

While this lets us quickly evaluate the performance of our models using the existing image labels, it's not the best way to judge whether or not two items look the same. The category labels we have are relatively coarse-grained; we know if the network can distinguish between a coat and pants, but can it tell the difference between flannel and gingham? Since we are working on solving a task that is inherently subjective, we decided the best way to actually evaluate it was to get human feedback rating its performance.

Learning from People

Having our CNNs do a bunch of math to find pairs of neighboring images is all well and good for machine learning contests, but it doesn’t necessarily tell us which of our models best helps people find similar items. It doesn’t help that “similarity” means different things to different people. Faced with this subjective problem, one way to evaluate our models is to see which one provides the greatest amount of good to the greatest number of people. To that end, we built a website that puts our models head-to-head in a blind test and lets the public be the judge!

If you head on over to Dope or Nope? by Datascope and click on “Play Dope or Nope,” you can participate too! You’ll cycle through random pairs of items that one of seven visual search networks determined to be “nearest neighbors.” If you like the match, swipe right. Otherwise, swipe left. Simple and fun, no? Setting up little games like this is a good way to get quick feedback from the people that matter the most: your users.

Speaking of feedback, we also built in a leaderboard to see how the models stack up against each other. A bunch of Datascopers have voted over the past few days, and here’s how things stand as of press time.

Our visual search networks ranked by number of approvals.

Some thoughts on the results so far: most of the models perform similarly, with approval ratings hovering around 40-45%, while one model stands above the pack with a ~65% approval rating. This model was retrained on the DeepFashion data set, uses the FC6 layer features, and finds the nearest neighbors using the Hamming distance. This result is consistent with the findings of the Pinterest paper we based our model on. However, it is hard to know ahead of time which particular combination of features will perform best on your problem, and this is a good example of why you should try lots of models and iterate quickly. Moreover, this is only what we’ve seen so far, and we’re excited for you to be part of the process. Are our networks totally dope? Or do they just make you want to say “...nope?”

For the purposes of this post, we built a gamified voting application to let our visual search models be judged by many people. It’s also easy to imagine how a tool like Dope or Nope could be tailored to help a specific person solve a particular problem. For example, a professional stylist might go to a similar site for inspiration on creative clothing combinations. Or, Dope or Nope might simply help you pick out clothes in the morning, but could periodically retrain its algorithms based on your feedback to better represent your own taste. Not only do these thought experiments demonstrate how well data science dovetails with human-centered design, they also emphasize that machine learning and other forms of artificial intelligence will augment human expertise, capability, and curiosity rather than outright replace them.

What We Learned from Machines/How to Repay the Favor

We wanted to see how difficult it was to build a visual search engine using little more than open-source code, public data, and our own gumption. After a few weeks of hacking, we’re happy to report that many of the requisite steps are actually quite easy. Thanks to libraries like Keras, it was no problem for us to stand on the shoulders of giants and use state-of-the-art networks as a starting point for our work. Retraining these networks to learn specifically about fashion products (instead of the thousands of objects employed in the ImageNet database) was slightly more time and cost intensive, but thanks to the kind souls at AWS, not prohibitively so.

On the other hand, this exploration exposed us to challenges that any aspiring computer vision application would need to overcome. We were lucky to have a data set that had such rich annotations and was relatively uniform in scope and style. Anyone building a product search application from “images in the wild” would likely spend a good deal of time extracting the products that live inside those images (perhaps even training entirely separate networks to do so) and dealing with the wide varieties of angles, background lighting, resolutions, and overall quality of those “wild images.” Sure, there are techniques to account for these variations, but deciding which methods to use isn’t something you get for free. The uniformity of data we worked with was both a blessing and a curse: it made our lives easier, but also imbued our models with bias. If we were building out an actual application, it would need to generalize beyond a database of curated fashion images and work with photos snapped on a phone.

The diversity of images a visual search application would need to handle is matched only by the diversity of user preferences it needs to account for. At the end of the day, no standard metric reveals whether your algorithm is doing well by your users: only your users can tell you that. Particularly if you’re geeks like us, it’s sometimes easy to get buried in the computational nuts and bolts of a problem. But because we care primarily about solving problems for people, we’re not shy about getting the results of our tinkering out in front of people as quickly as possible. People like you contributing to little experiments like Dope or Nope will tell us more about what’s working well (or not well) and how we can improve than any array of GPU machines can. In that regard, machines have about as much to learn from us as we have to learn from them. Working carefully, mindfully, and iteratively, we can ride the sometimes bumpy road toward that global optimum off in the sunset.

[1] We could probably automatically create bounding boxes using a network trained in Object Localization, but that's a topic for another blog post.

[2] We considered using architectures such as Inception and ResNet, but decided against them for this project because of their comparative complexity.

[3] Why 4,096? Well, it just happens to be the size of the fully connected layers in the original VGG-16 network. This is an example of one of the many things we didn't have to experiment with and decide for ourselves!

[4] The softmax function is commonly used to turn the output of a neural network's final layer into valid probabilities that sum to one.

[5] Want to try this at home? We used a p2.xlarge instance for this project, which has a graphics processing unit (GPU) with 12GB of dedicated RAM.