Hipster Classifier Icebreaker

September 18, 2014

This post is cross-posted on the Metis blog.

There are lots of ways one could imagine kicking off the first day of the first data science bootcamp—there is, afterall, a lot to learn between now and then. The question is, where to start? Should we all get our laptops out and show off our terminal-fu to level-set the class on day one? Should we have a reading from the Book of Bayes to set the tone? Should we practice those ever-important multi-modal communication skills?

We’ll get to those things eventually, but we couldn’t help but get inspired by a different, more important opportunity—the opportunity to develop a Hipster Classifier. Since it was the first day of class and we wanted to use this as an opportunity to know each other, we soon “kept all electronic devices in the off position” and instead developed our Hipster Classifiers using our internal classifiers, namely our brains.

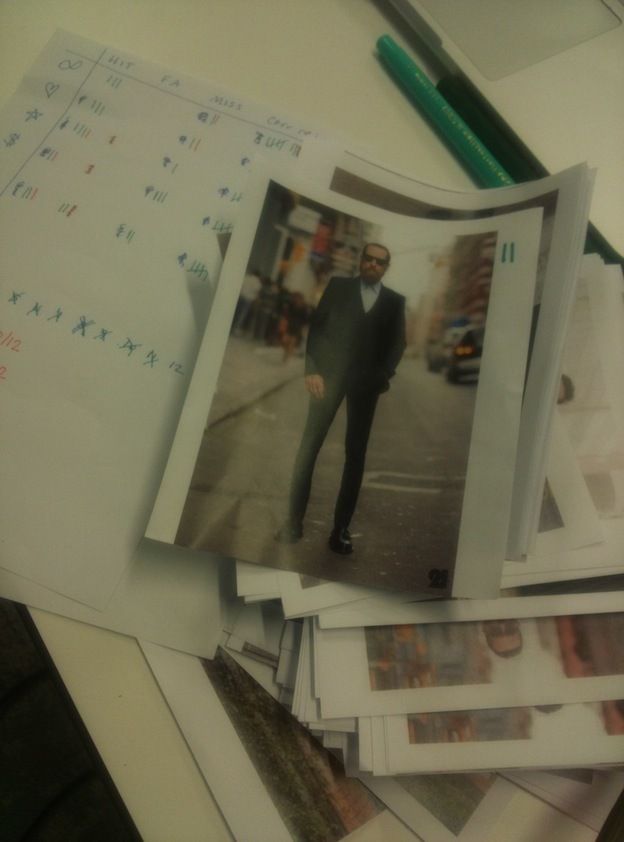

To start, we shared a dossier of 30 photos with the class. For each photo, we took a quick vote in the classroom to decide whether or not the photo was of a hipster. This was useful because it level-set everyone’s definition of what a hipster is (which is difficult even among “experts”) and it allowed us to quickly develop a labeled training set.

We then split into groups of 3 and, for each group, instructed them to build a classifier however they pleased using the 30 photos and their corresponding “hipster” or “not hipster” binary labels. The important thing is that each group’s classifier had to be written on paper (no computers and no calculations beyond simple weighting) and it had to be “objective enough” that anyone—specifically Laurie and Irmak—could use the group’s classifier to make predictions on other photos. If you use slang that others don’t know, the classifier “threw an error,” not unlike software. If you use something subjective like “smug moustache”, “bizarre style” or “infra-trendy jeans”, the reader of your classifier will use their own subjectivity, not the group’s subjectivity. So with that, we got classifiers that looked something like this:

Next, each group took their classifier and, on the honor system, used it to make classifications based on an unlabelled test set of 12 photos. Applying the logic and non-subjectivity that was encoded in their scrap-of-paper classifiers, the groups labeled each picture as either being a “hipster” or “not a hipster”. At the end we revealed which pictures were “true hipsters” and which weren’t[1] and the students tallied up their hits (correctly labeled pictures) and misses (incorrectly labeled pictures) to estimate their accuracy. As you can see in the image below, the classifiers were amazingly good and the top three teams had 10 or 11 correct out of 12; not bad at all!

Obviously, you can’t have an icebreaker like this with any crowd. But the beauty of this little icebreaker is that (i) it let students get to know each other during a fun, engaging and relevant game, (ii) it introduced students to the notion of what it means to build classifiers, (iii) it introduced the notion of training and test sets, and (iv) it introduced the concept of accuracy. In designing any data science curriculum, this seems like a pretty great way to immerse people in quantitative thinking without getting bogged down in the statistical details when the more important thing is to meet your colleagues on the first day.

If you have any ideas for how this game could be reused for a different classification task or other suggestions for how to make this even more fun and engaging, throw your thoughts in the comments! This is an evolving concept and we appreciate any input you might have.

[1] We really struggled with the best way to produce a test set but ultimately settled on the following approach. We laid out the training photos on an axis that ranked the photos based on the number of “yes hipster!” votes they got from the class. Then we set out the test set pictures where they “belonged” on the axis and labeled them based on which side of the hipster threshold they fell. Although this strategy might make your brain twitch a bit, it was the least invalid and most fair way we could think to produce a test set, particularly given the subjectivity of what a “hipster” is in the first place and that experts don’t necessarily agree.